I became an Amazon delivery goblin and barely lived to tell the tale

Fans of Lethal Company and R.E.P.O. will love Deadly Delivery's take on the "friendslop" formula.

A new study by Google suggests that advanced reasoning models achieve high performance by simulating multi-agent-like debates involving diverse perspectives, personality traits, and domain expertise. Their experiments demonstrate that this internal debate, which they dub “society of thought,” significantly improves model performance in complex reasoning and planning tasks.

The researchers found that leading reasoning models such as DeepSeek-R1 and QwQ-32B, which are trained via reinforcement learning (RL), inherently develop this ability to engage in society of thought conversations without explicit instruction.

These findings offer a roadmap for how developers can build more robust LLM applications and how enterprises can train superior models using their own internal data.

What is society of thought?

The core premise of society of thought is that reasoning models learn to emulate social, multi-agent dialogues to refine their logic. This hypothesis draws on cognitive science, specifically the idea that human reason evolved primarily as a social process to solve problems through argumentation and engagement with differing viewpoints.

The researchers write that "cognitive diversity, stemming from variation in expertise and personality traits, enhances problem solving, particularly when accompanied by authentic dissent." Consequently, they suggest that integrating diverse perspectives allows LLMs to develop robust reasoning strategies. By simulating conversations between different internal personas, models can perform essential checks (such as verification and backtracking) that help avoid common pitfalls like unwanted biases and sycophancy.

In models like DeepSeek-R1, this "society" manifests directly within the chain of thought. The researchers note that you do not need separate models or prompts to force this interaction; the debate emerges autonomously within the reasoning process of a single model instance.
Examples of society of thought

The study provides tangible examples of how this internal friction leads to better outcomes. In one experiment involving a complex organic chemistry synthesis problem, DeepSeek-R1 simulated a debate among multiple distinct internal perspectives, including a "Planner" and a "Critical Verifier." The Planner initially proposed a standard reaction pathway. However, the Critical Verifier (characterized as having high conscientiousness and low agreeableness) interrupted to challenge the assumption and provided a counterargument with new facts. Through this adversarial check, the model discovered the error, reconciled the conflicting views, and corrected the synthesis path.

A similar dynamic appeared in creative tasks. When asked to rewrite the sentence, "I flung my hatred into the burning fire," the model simulated a negotiation between a "Creative Ideator" and a "Semantic Fidelity Checker." After the ideator suggested a version using the word "deep-seated," the checker retorted, "But that adds 'deep-seated,' which wasn't in the original. We should avoid adding new ideas." The model eventually settled on a compromise that maintained the original meaning while improving the style.

Perhaps the most striking evolution occurred in "Countdown Game," a math puzzle where the model must use specific numbers to reach a target value. Early in training, the model tried to solve the problem using a monologue approach. As it learned via RL, it spontaneously split into two distinct personas: a "Methodical Problem-Solver" performing calculations and an "Exploratory Thinker" monitoring progress, who would interrupt failed paths with remarks like "Again no luck … Maybe we can try using negative numbers," prompting the Methodical Solver to switch strategies.

These findings challenge the assumption that longer chains of thought automatically result in higher accuracy.
Instead, diverse behaviors such as looking at responses through different lenses, verifying earlier assumptions, backtracking, and exploring alternatives drive the improvements in reasoning. The researchers reinforced this by artificially steering a model’s activation space to trigger conversational surprise; this intervention activated a wider range of personality- and expertise-related features, doubling accuracy on complex tasks.

The implication is that social reasoning emerges autonomously through RL as a function of the model's drive to produce correct answers, rather than through explicit human supervision. In fact, training models on monologues underperformed raw RL that naturally developed multi-agent conversations. Conversely, performing supervised fine-tuning (SFT) on multi-party conversations and debate significantly outperformed SFT on standard chains of thought.

Implications for enterprise AI

For developers and enterprise decision-makers, these insights offer practical guidelines for building more powerful AI applications.

Prompt engineering for 'conflict'

Developers can enhance reasoning in general-purpose models by explicitly prompting them to adopt a society of thought structure. However, it is not enough to simply ask the model to chat with itself.

"It's not enough to 'have a debate' but to have different views and dispositions that make debate inevitable and allow that debate to explore and discriminate between alternatives," James Evans, co-author of the paper, told VentureBeat.

Instead of generic roles, developers should design prompts that assign opposing dispositions (e.g., a risk-averse compliance officer versus a growth-focused product manager) to force the model to discriminate between alternatives. Even simple cues that steer the model to express "surprise" can trigger these superior reasoning paths.
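As a rough illustration of the prompting advice above, the sketch below assembles a single prompt that assigns opposing dispositions to named personas. Everything in it is an assumption for illustration: the `Persona` class, the `build_debate_prompt` helper, the prompt wording, and the example task are hypothetical and do not come from the study. The resulting string could be sent to any chat model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    """A hypothetical debate participant; the fields are illustrative only."""
    name: str
    disposition: str


def build_debate_prompt(task: str, personas: list[Persona]) -> str:
    """Compose one prompt asking a single model to argue between personas."""
    if len(personas) < 2:
        raise ValueError("a debate needs at least two personas")
    # One roster line per persona, stating the disposition it must argue from.
    roster = "\n".join(f"- {p.name}: {p.disposition}" for p in personas)
    return (
        f"Task: {task}\n\n"
        "Reason through this task as a conversation among these personas:\n"
        f"{roster}\n\n"
        "Each persona must challenge the others' assumptions, voice surprise "
        "when a step looks wrong, and propose alternatives. "
        "After the debate, state the answer the group converges on, "
        "prefixed with 'Final answer:'."
    )


# Opposing dispositions taken from the example in the article text.
personas = [
    Persona("Compliance officer", "risk-averse; looks for regulatory exposure"),
    Persona("Product manager", "growth-focused; argues for shipping quickly"),
]
# The task below is a made-up placeholder.
prompt = build_debate_prompt("Should we launch the feature in Q3?", personas)
print(prompt)
```

The helper rejects a roster with fewer than two personas, since a single voice cannot produce the disagreement the article describes.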
Design for social scaling

As developers scale test-time compute to allow models to "think" longer, they should structure this time as a social process. Applications should facilitate a "societal" process where the model uses pronouns like "we," asks itself questions, and explicitly debates alternatives before converging on an answer.

This approach can also expand to multi-agent systems, where distinct personalities assigned to different agents engage in critical debate to reach better decisions.

Stop sanitizing your training data

Perhaps the most significant implication lies in how companies train or fine-tune their own models. Traditionally, data teams scrub their datasets to create "Golden Answers" that provide perfect, linear paths to a solution. The study suggests this might be a mistake.

Models fine-tuned on conversational data (e.g., transcripts of multi-agent debate and resolution) improve reasoning significantly faster than those trained on clean monologues. There is even value in debates that don’t lead to the correct answer.

"We trained on conversational scaffolding that led to the wrong answer, then reinforced the model and found that it performed just as well as reinforcing on the right answer, suggesting that the conversational habits of exploring solutions was the most important for new problems," Evans said.

This implies enterprises should stop discarding "messy" engineering logs or Slack threads where problems were solved iteratively. The "messiness" is where the model learns the habit of exploration.

Exposing the 'black box' for trust and auditing

For high-stakes enterprise use cases, simply getting an answer isn't enough. Evans argues that users need to see the internal dissent to trust the output, suggesting a shift in user interface design.

"We need a new interface that systematically exposes internal debates to us so that we 'participate' in calibrating the right answer," Evans said. "We do better with debate; AIs do better with debate; and we do better when exposed to AI's debate."

The strategic case for open weights

These findings provide a new argument in the "build vs. buy" debate regarding open-weight models versus proprietary APIs. Many proprietary reasoning models hide their chain-of-thought, treating the internal debate as a trade secret or a safety liability.

Evans argues that "no one has really provided a justification for exposing this society of thought before," but that the value of auditing these internal conflicts is becoming undeniable. Until proprietary providers offer full transparency, enterprises in high-compliance sectors may find that open-weight models offer a distinct advantage: the ability to see the dissent, not just the decision.

"I believe that large, proprietary models will begin serving (and licensing) the information once they realize that there is value in it," Evans said.

The research suggests that the job of an AI architect is shifting from pure model training to something closer to organizational psychology.

"I believe that this opens up a whole new frontier of small group and organizational design within and between models that is likely to enable new classes of performance," Evans said. "My team is working on this, and I hope that others are too."

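For readers unfamiliar with the Countdown Game mentioned in the society of thought article above, here is a minimal brute-force sketch of the puzzle itself. It assumes one common rule variant that the article does not spell out: each number may be used at most once, not every number has to be used, and intermediate results may be negative or fractional. The function names and the sample puzzle are hypothetical; this is not the study's code or its training setup.

```python
from fractions import Fraction
from itertools import combinations


def solve_countdown(numbers, target):
    """Return an expression string that evaluates to target, or None."""
    start = [(Fraction(n), str(n)) for n in numbers]
    return _search(start, Fraction(target))


def _search(items, target):
    # Succeed as soon as any available value already equals the target.
    for value, expr in items:
        if value == target:
            return expr
    # Otherwise merge every pair of values with each operator and recurse.
    for i, j in combinations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        candidates = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),  # negative intermediates are allowed
        ]
        if b != 0:
            candidates.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb} / {ea})"))
        for candidate in candidates:
            found = _search(rest + [candidate], target)
            if found is not None:
                return found
    return None


# Made-up puzzle: reach 75 from the numbers 3, 7, 25 and 50.
expression = solve_countdown([3, 7, 25, 50], 75)
print(expression)
```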
AMD CIO Hasmukh Ranjan explains how data strategy, digital twins, and autonomous systems are reshaping enterprise AI at scale.

Reuters : Source: SpaceX and xAI are in talks to merge ahead of a planned IPO this year; under the proposed deal, shares of xAI would be exchanged for shares in SpaceX — Elon Musk's SpaceX and xAI are in discussions to merge ahead of a blockbuster public offering planned for later this year.

Kevin Maloy, vp of NexxenTV, Nexxen

The 2026 FIFA World Cup is shaping up to be a landmark moment. Not only is it expanding to include more teams and arriving on North American soil, but it will also illustrate a new reality for premium television: Audiences can be massive and still hard to pin down. […]

Sarah Perez / TechCrunch : Appfigures: Sora's app downloads dropped 32% month-over-month in December and 45% in January to 1.2M; consumer spending on the app fell 32% MoM as of January — After rapidly hitting the top of the App Store in October, OpenAI's video-generation app Sora is now struggling.

A Gemini Live glitch has the assistant infinitely looping on Android Auto.

The Anker Soundcore Aeroclip boasts great sound without cutting you off from the outside world.

Have you ever wished you could buy the stand or charger used to display the iPhone and other products inside Apple Stores? A website called AppleUnsold might be the best way to do that.

Apple has brought Pixelmator Pro to iPad, putting one of the best image editing apps into the Creator Studio bundle with support for Apple Pencil and seamless syncing across Apple devices. The post Pixelmator Pro, one of the best image editing apps, finally lands on the iPad appeared first on Digital Trends.

Get an impressive charger for the price of a cheap lunch.

A new joint investigation by SentinelOne SentinelLABS and Censys has revealed that open-source artificial intelligence (AI) deployment has created a vast "unmanaged, publicly accessible layer of AI compute infrastructure" that spans 175,000 unique Ollama hosts across 130 countries. These systems, which span both cloud and residential networks across the world, operate outside the

This past summer, Google DeepMind debuted Genie 3. It’s what’s known as a world model, an AI system capable of generating images and reacting as the user moves through the environment the software is simulating. At the time, DeepMind positioned Genie 3 as a tool for training AI agents. Now, it’s making the model available to people outside of Google to try with Project Genie. To start, you’ll need Google’s $250 per month AI Ultra plan to check out Project Genie. You’ll also need to live in the US and be 18 years or older. At launch, Project Genie offers three different modes of interaction: World Sketching, exploration and remixing. The first sees Google’s Nano Banana Pro model generating the source image Genie 3 will use to create the world you will later explore. At this stage, you can describe your character, define the camera perspective (first-person, third-person or isometric) and choose how you want to explore the world Genie 3 is about to generate. Before you can jump into the model’s creation, Nano Banana Pro will “sketch” what you’re about to see so you can make tweaks. It’s also possible to write your own prompts for worlds others have used Genie to generate. One thing to keep in mind is that Genie 3 is not a game engine. While its outputs can look game-like, and it can simulate physical interactions, there aren’t traditional game mechanics here. Generations are also limited to 60 seconds, as is the presentation, which is capped at 24 frames per second and 720p. Still, if you’re an AI Ultra subscriber, this is a cool opportunity to see the bleeding edge of what DeepMind has been working on over the past couple of years. This article originally appeared on Engadget at https://www.engadget.com/ai/googles-project-genie-lets-you-generate-your-own-interactive-worlds-183646186.html?src=rss

Conor Murray / Forbes : Social media app UpScrolled hits #1 on Apple's US app store following allegations that TikTok suppresses anti-ICE videos; its founder says it crossed 1M users — Topline — Social media app UpScrolled ranks No. 1 on Apple's U.S. app store as of Thursday as it capitalizes on user discontent with TikTok …

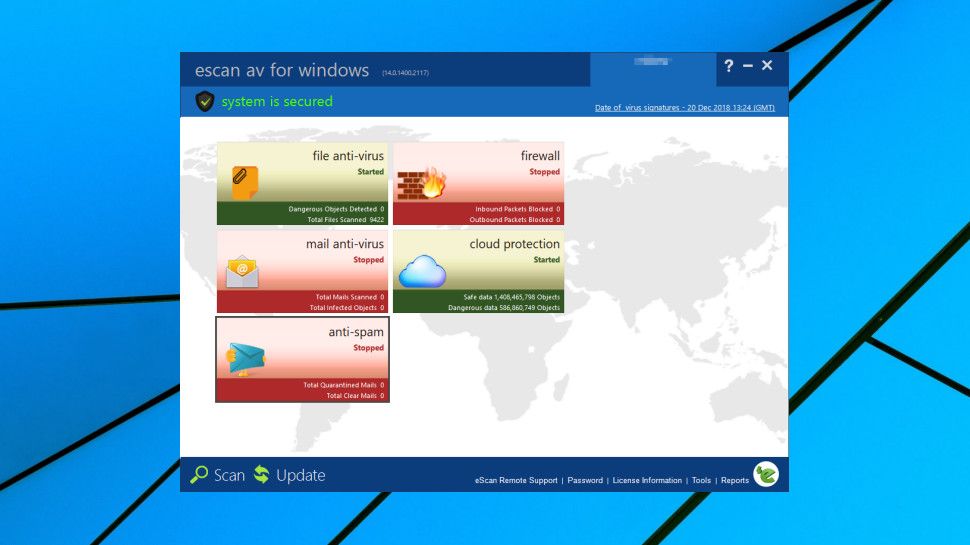

Popular antivirus program eScan was used as a malware launchpad, deploying a backdoor to a small subset of users.

Apple's M4 MacBook Air is eligible for a $150 discount during Amazon's month-end sale, with several models to choose from. The $150 markdown at Amazon brings the standard M4 MacBook Air 13-inch down to $849.99 in the popular Midnight finish. Continue reading on AppleInsider.